User stories are not requirements

Written by Jane Orme

We often hear, “Aren’t our requirements just our user stories?”

This question often comes up when a product’s goal or intended use changes, or when its complexity, size, or team changes. Team members can no longer remember precisely what the product does or how it works. They know that all the features they worked on have been recorded as user stories, so they assume it will be simple to extract the requirements from those.

Unfortunately, it usually isn’t so straightforward.

In this post, we discuss some of the challenges of recovering requirements from user stories, and some ways to avoid ending up in that position.

Contents:

- What is the difference between user stories and requirements?

- How do people often approach requirements?

- The problems with tacking on requirements to user stories

- How to cut the complexity of handling requirements and user stories

What is the difference between user stories and requirements?

Before we begin, let’s look at the difference between a user story and a requirement.

User stories:

- Describe the features from users’ perspectives. They use everyday language, making them understandable by all stakeholders.

- Ensure user needs are met.

- Encourage conversations. Stories are a starting point for gathering information and defining the requirements.

- Are typically discarded when the work is completed, in Agile at least.

- Describe why the product should do what it does, rather than how.

Requirements:

- Describe overall what the product does (or should do) and can include how.

- Ensure the product quality is good.

- Ensure technical feasibility.

- Describe functional and non-functional specifications. These can include details of performance criteria, technical needs, data structures, and algorithms.

- Might include the user needs as separate requirements.

- Are gathered and formatted in an easy-to-read way, describing the overall current (or intended) solution.

How do people often approach requirements?

One Agile approach people commonly take is to try to store their requirements in their workflow management tool. The user stories and the code are thought to describe the specifications, so they don’t see the need to store them anywhere else.

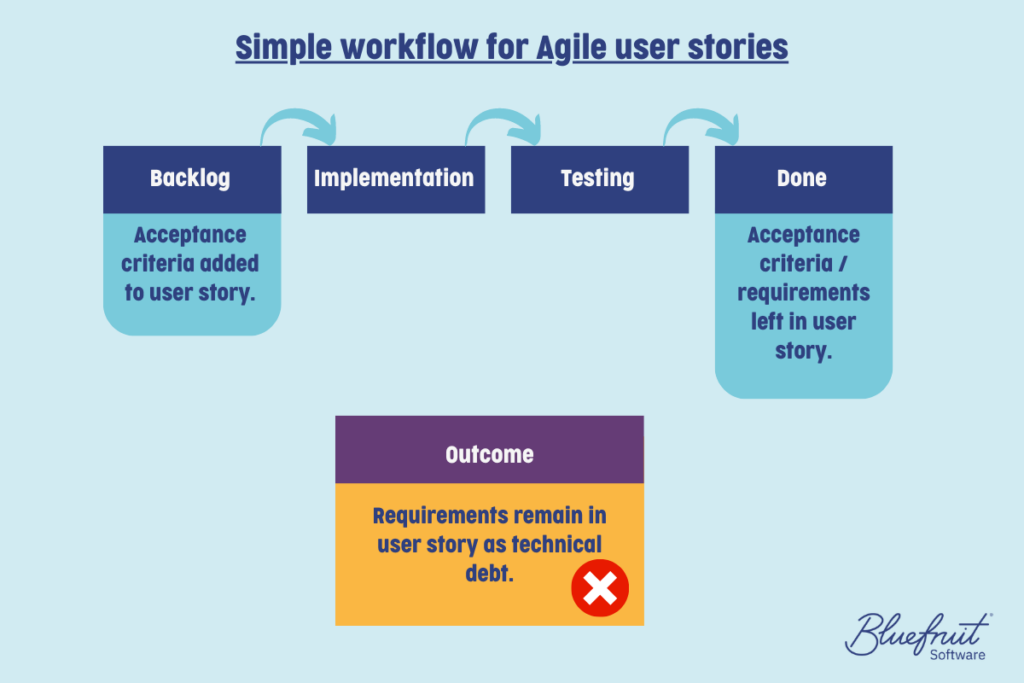

When the user story is in the backlog waiting to be developed, requirements are added to it, often in the form of acceptance criteria. Developers and testers use these as the rules to code and test against.

When the user story is done, these requirements are left within the user story, and the focus moves on to the next story. Because teams plan to keep their user stories, they know they will have a record of what was added and when. This saves time.

However, the requirements left within user stories become a type of technical debt, which ultimately causes issues that outweigh the time saved. Within a user story, it is much more challenging to review, approve, and control the requirements.

The problems with tacking on requirements to user stories

Mixed messages

Keeping requirements spread through your work management tool might work okay for short-term projects. But it becomes a problem when people need to review all requirements. If requirements are buried within years’ worth of user stories, it is hard to gather them all. For larger and legacy products, recovering all this information and working out which are still relevant can be a huge and complex task.

Requirements often evolve as a project progresses. Some might become redundant, and others are deprecated as improvements are added. Some user stories are never started or completed. This adds to the complexity.

Without a single source of truth, it is difficult to work out what specifically was in each software version.

Trickier knowledge sharing

If you want to speed up knowledge transfer, having a single location (or document) containing all the relevant and current information is best. It also improves traceability. Stakeholders or developers want information about what the product does and won’t want to spend ages hunting through kanban boards to find out.

They also don’t want to read outdated user stories and mistake them for the current implementation. This is an ineffective communication format and is a barrier for less technical people. If you can’t avoid using user stories to manage requirements, ensure each story has a unique ID and is linked to related tests, defects, and any user stories it deprecates.
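As a rough sketch of what that linking could look like, assuming a hypothetical `UserStory` record (the field names and IDs here are illustrative and not taken from any particular work management tool), the links make it possible to filter out stories that a later story has deprecated:

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    """Hypothetical record: a story with a unique ID and traceability links."""

    story_id: str
    summary: str
    test_ids: list[str] = field(default_factory=list)
    defect_ids: list[str] = field(default_factory=list)
    deprecates: list[str] = field(default_factory=list)  # IDs of stories this one replaces


def current_stories(stories: list[UserStory]) -> list[UserStory]:
    """Return only the stories that no other story has deprecated."""
    deprecated = {old_id for story in stories for old_id in story.deprecates}
    return [story for story in stories if story.story_id not in deprecated]


# Illustrative data only.
stories = [
    UserStory("US-1", "Export report as CSV", test_ids=["TEST-1"]),
    UserStory("US-2", "Export report as CSV or PDF", test_ids=["TEST-2"], deprecates=["US-1"]),
]
print([story.story_id for story in current_stories(stories)])
```

Even with links like these, a reader still has to reconstruct the current behaviour from the surviving stories, which is part of why a dedicated requirements document tends to be easier to read.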

Typically, documents and requirement management tools have better readability, structure, and accessibility.

Hindering quality

Tests are linked to user stories but not necessarily to requirements, since some user stories cover multiple requirements. Not having a single source of truth can make it harder to create a regression suite with good test coverage, and harder to verify that all requirements are well-tested.

In sectors like medtech, it’s important to ensure that requirements are validated and verified. When making changes in minor releases requiring regression testing, you can save time by doing an impact analysis and running a subset of tests. This is easier with an organised requirements list.

Adding version control to requirements helps ensure quality – formalising approvals and preventing accidental edits. But doing this to user stories doesn’t make sense.

How to cut the complexity of handling requirements and user stories

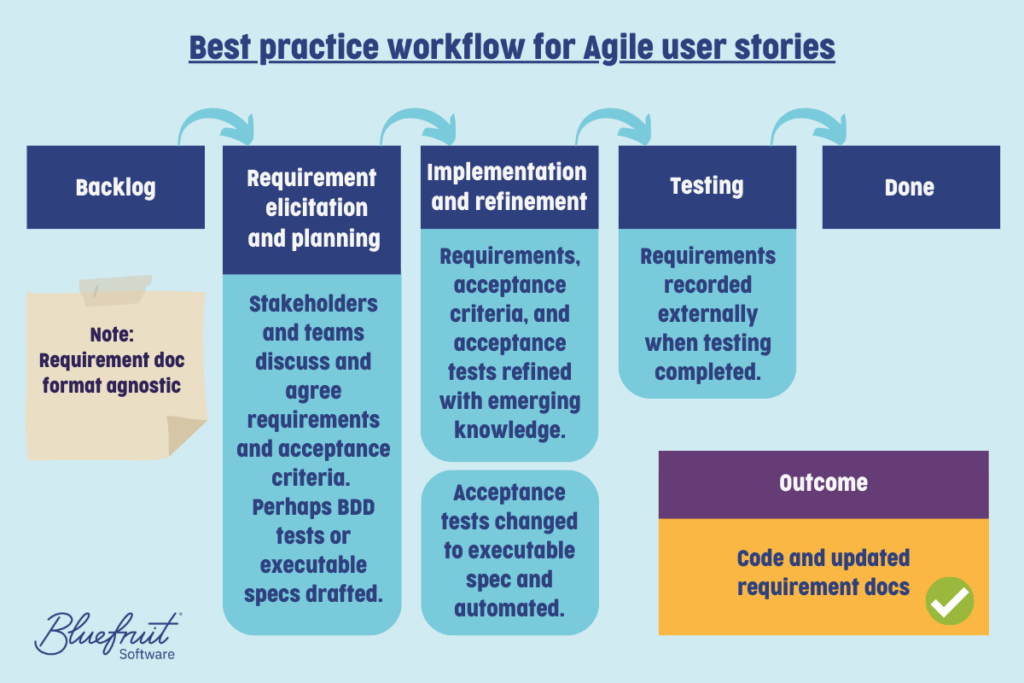

The best-practice approach is not wildly different; it just adds a couple of extra steps. Whilst requirements might be worked on in a user story, that isn’t their final resting place.

User story and requirement planning and implementation

Initially, the user story sits in the backlog as before. However, the team will elicit the requirements as part of the planning conversations. Quick feedback loops will often happen as these requirements and acceptance tests are defined, and that continues during implementation. These conversations improve development team understanding as well as requirement clarity and quality.

Specifications might be written within the user story and have the final wording refined along with the acceptance criteria as development occurs.

Executable specifications (and Behaviour-Driven Development (BDD) tests)

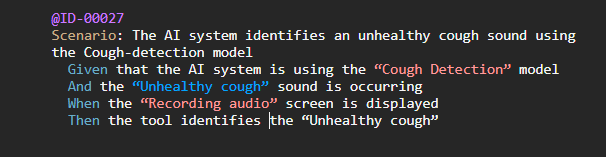

Specifications can also take the form of executable specifications – requirements written as human-readable tests that developers automate. In this way, the requirement documentation is auto-generated when the tests run. For more information on executable specifications, see this post on Gherkin and BDD.

For example, in the following executable specification the title is the requirement text:
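A minimal sketch of the idea, assuming a hypothetical thermostat product and Python’s built-in `unittest` module in place of a Gherkin tool: the first line of the test’s docstring acts as the title and carries the requirement wording, while the Given/When/Then steps appear as comments.

```python
import unittest


class Thermostat:
    """Hypothetical product code, included only so the example runs."""

    def __init__(self, target_celsius: float) -> None:
        self.target_celsius = target_celsius

    def heater_on(self, current_celsius: float) -> bool:
        # Heat whenever the room is below the target temperature.
        return current_celsius < self.target_celsius


class HeatingSpecification(unittest.TestCase):
    def test_heater_turns_on_below_target(self) -> None:
        """The heater shall turn on when the room is below the target temperature."""
        # Given a thermostat set to 20 degrees
        thermostat = Thermostat(target_celsius=20.0)
        # When the room is at 18 degrees
        heating = thermostat.heater_on(current_celsius=18.0)
        # Then the heater is on
        self.assertTrue(heating)


def requirement_text(test: unittest.TestCase) -> str:
    """Return the requirement wording carried by a test's title."""
    return test.shortDescription() or test.id()
```

Running the class with `python -m unittest` verifies the requirement, and `requirement_text` shows how the same wording could be pulled out for a requirements document.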

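When development on the user story is done, an additional step takes place as part of the “Definition of Done” checklist: the finished requirements are moved out of the user story and into the requirement document or requirement management tool.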

Until this takes place, the user story is not considered finished. At this point, the requirements will usually be complete enough that they can be easily copied/pasted into your requirement document or requirement management tool. If you were using executable specifications, living documentation containing the requirement document can be auto-generated along with the corresponding verification test results. Living documentation is documentation that often lives within the source control and is updated along with the code. It is auto-generated as part of the build, and so always matches the implementation.
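As a rough illustration of auto-generated living documentation, the sketch below runs a set of executable specifications and prints each requirement next to its verification result. It assumes Python’s built-in `unittest` module; the pump requirements, the `RecordingResult` class, and the `generate_living_documentation` function are hypothetical names invented for the example, not part of any specific tool.

```python
import unittest


class PumpSpecification(unittest.TestCase):
    """Hypothetical executable specifications; each docstring title is a requirement."""

    def test_pump_stops_when_tank_full(self):
        """REQ-001: The pump shall stop when the tank is full."""
        tank_level, capacity = 100, 100
        pump_running = tank_level < capacity
        self.assertFalse(pump_running)

    def test_pump_runs_when_tank_low(self):
        """REQ-002: The pump shall run when the tank is below capacity."""
        tank_level, capacity = 40, 100
        pump_running = tank_level < capacity
        self.assertTrue(pump_running)


class RecordingResult(unittest.TestResult):
    """Record (requirement text, outcome) for every test that finishes."""

    def __init__(self):
        super().__init__()
        self.rows = []

    def _record(self, test, outcome):
        self.rows.append((test.shortDescription() or test.id(), outcome))

    def addSuccess(self, test):
        super().addSuccess(test)
        self._record(test, "PASS")

    def addFailure(self, test, err):
        super().addFailure(test, err)
        self._record(test, "FAIL")

    def addError(self, test, err):
        super().addError(test, err)
        self._record(test, "ERROR")


def generate_living_documentation(spec_class):
    """Run the specifications and return a plain-text requirements report."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(spec_class)
    result = RecordingResult()
    suite.run(result)
    lines = ["Requirements and verification results", ""]
    lines += [f"- [{outcome}] {text}" for text, outcome in sorted(result.rows)]
    return "\n".join(lines)


print(generate_living_documentation(PumpSpecification))
```

Because the report is produced from the tests themselves, regenerating it as part of the build keeps the requirement wording and the verification results in step with the code.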

Allowing your teams to follow best practice for user stories and requirements means everyone will collaborate more easily. Following a clear but dynamic separation of the two will:

- Make project knowledge sharing easier.

- Improve traceability within a project.

- Decrease the risk of documentation adding technical debt.

Overall, this means that projects will see improved quality and, in the long term, it can help with keeping costs under control.
